Background and Overview

Artificial intelligence (AI) is rapidly reshaping healthcare systems worldwide, promising to reduce inefficiencies, strengthen clinical decision-making, and improve patient engagement. At its core, AI offers the ability to process vast amounts of data, generate insights in real time, and automate tasks that have traditionally consumed scarce human resources. For health systems under pressure from limited workforce capacity, rising patient demand, and persistent inequities, these capabilities present both hope and challenge.

The applications of AI in health are already diverse, spanning administrative, clinical, and patient-facing domains. In the back office, AI can streamline documentation, claims processing, and supply chain management, freeing clinicians to spend more time with patients. At the point of care, AI-driven clinical decision support integrates guidelines directly into practice, helping providers detect risks earlier and reduce variation in treatment. For patients, AI-enabled chatbots and digital companions offer information, reminders, and health education in ways that feel personal, accessible, and adaptive to local languages and contexts.

Yet alongside these opportunities come significant risks. AI systems can produce errors, replicate biases in their training data, and create dependency if not managed carefully. Data privacy and security are central concerns, particularly where sensitive health information is involved. Regulation and oversight remain uneven across Africa, raising questions about accountability and trust. Without strong ethical safeguards, AI could exacerbate inequities rather than reduce them.

What makes the African context distinct is the opportunity to leapfrog into AI-driven solutions without being weighed down by entrenched legacy systems. By focusing on context-specific innovation, such as language-adapted patient tools and clinician-facing systems aligned with local disease burdens, African health systems can pioneer practical, low-cost applications of AI that directly address frontline realities. At the same time, collaboration across organizations, engagement with regulators, and a steadfast commitment to human-centered care are critical to ensure that technology augments rather than replaces the relationships at the heart of healthcare.

AI in healthcare is about reshaping how health systems deliver safe, compassionate, and equitable care. With the right balance of innovation, ethical guardrails, and community engagement, Africa has the potential not only to adopt AI but to define a global model of responsible, inclusive, and sustainable digital transformation in health.

Key Themes

Administrative and back-office applications of AI are reshaping how health systems function. Tools such as ambient scribes reduce the clerical burden by transcribing consultations and generating structured notes, while machine learning supports predictive stock management and claims adjudication. Appointment reminders are also being reimagined through natural language processing to feel more personal and engaging. Yet challenges remain, particularly in multilingual African contexts where code-switching and local languages can cause current tools to fail, highlighting the need for locally adapted solutions.

Clinician-facing tools are emerging as powerful aids in decision-making, especially in resource-constrained settings. Clinical decision support systems now integrate guidelines directly into electronic medical records, ensuring that best practices are embedded at the point of care. At Penda Health, this has evolved from simple rule-based prompts to AI Consult, a system that runs in the background to provide real-time guidance. With red, yellow, and green alerts, it helps clinicians identify risks, refine diagnoses, and avoid inappropriate treatments, improving adherence to evidence-based care by up to 50%.

Patient-facing AI tools are redefining how individuals interact with health systems. Unlike “Dr. Google,” these platforms are context-sensitive, able to converse in local languages and adapt to patient preferences. Examples include Penda Health’s ChatnaPenda WhatsApp service, which integrates AI for direct patient support, and Jacaranda Health’s Swahili-based antenatal prompts. WHO’s S.A.R.A.H. avatar also demonstrates how natural voice and interactive interfaces can deliver accessible health education. These tools expand patient autonomy by offering second opinions and guidance, though their safe use requires careful oversight.

As AI spreads through healthcare, risks and guardrails become critical. Hallucinations, biases, and limitations in local data pose threats to reliability. Patient privacy, consent, and confidentiality must remain non-negotiable, especially when sensitive conversations are transcribed or shared. Regulation in Africa is still evolving, creating a tension between rapid innovation and patient protection. Accountability is a major unresolved question: when errors occur, responsibility could fall on clinicians, developers, or health systems. Without clear governance, trust in AI-enabled care may be undermined.

Applied analytics powered by AI is unlocking new possibilities in health systems research and monitoring. Tools can process vast, complex datasets rapidly, from cost analyses to multi-county impact studies. They also accelerate qualitative analysis, transcribing and coding large amounts of text data at scale, while still requiring human oversight. In clinical contexts, AI is supporting diagnostic accuracy through image analysis in radiology and pathology, even making advanced scans usable at the community health worker level. By transforming both quantitative and qualitative data use, AI is reshaping evidence generation for decision-making.

Ethical considerations remain at the heart of AI in health. Technology must enhance rather than replace human relationships, respecting patient dignity and ensuring care remains person-to-person. Informed consent is strengthened when AI enables communication in a patient’s preferred language or illustrates complex treatments through simulations, but risks arise if transparency is lost. Biases in training data can entrench inequities, particularly against marginalized communities. Privacy, confidentiality, and accountability must be safeguarded, ensuring that AI supports, rather than substitutes for, the trust, empathy, and moral judgment that underpin healing.

Africa has a unique opportunity to lead in the AI-driven healthcare revolution. Unlike markets burdened by entrenched legacy systems, African health systems can leapfrog directly into context-specific applications that address real-world challenges, from language barriers to workforce shortages. The challenge for health systems is to identify practical, often low-cost AI tools that can be piloted within months to solve real problems, building momentum through rapid experimentation. Progress also depends on strong collaboration and knowledge-sharing across organizations, as well as advocacy with regulators to preserve the flexibility to innovate while ensuring protective oversight.

By combining agility in experimentation with strong ethical safeguards, Africa can chart a path that is both innovative and inclusive, setting global examples for human-centered, equitable, and sustainable AI in healthcare.

Post-Presentation Discussion

One recurring concern is whether AI tools extend consultation times, reducing efficiency. Evidence from Penda Health shows that using AI Consult adds about two minutes to the consultation process. While this initially worried clinicians, those extra minutes enable better history-taking, more comprehensive differential diagnoses, and more human connection with patients. Far from being wasted time, this small increase can be the difference between missed information and life-saving care. Over time, clinicians have come to value the tool so highly that many now struggle to work without it.

The accuracy of AI-generated recommendations also came up as a key area of interest for healthcare workers. It was emphasized that AI cannot replace clinical acumen; it only supports it. The principle of “garbage in, garbage out” applies: if a clinician records incomplete or poor-quality information, the AI output will be limited or misleading. To address this, prompt engineering and contextual customization are critical. By embedding local guidelines, such as those for malaria and HIV and the IMNCI protocols, Penda ensures that AI recommendations remain relevant, safe, and aligned with Kenya’s healthcare realities.

Questions about data security and privacy highlighted the need for trust and regulatory alignment. In Penda Health’s case, all identifiable data remains on its own servers, while only de-identified data is transmitted to external APIs such as OpenAI’s. This ensures compliance with Kenya’s Data Protection Act and prevents patient data from being used for model training. However, the discussion also raised the question of whether Africa should advocate for de-identified local data to contribute to training datasets, ensuring global AI tools reflect African health realities rather than importing biases from other contexts.
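To make the idea of transmitting only de-identified data more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not a description of Penda Health's actual pipeline: the field names, the `deidentify` helper, and the phone-number pattern are all hypothetical, and real de-identification also has to handle names, dates, and other identifiers hidden in free text.

```python
import re

# Hypothetical direct-identifier fields; a real record schema will differ.
IDENTIFIER_FIELDS = {"name", "phone", "national_id", "address"}

# Rough pattern for phone-like digit runs in free text (illustrative only).
PHONE_PATTERN = re.compile(r"\+?\d[\d\s-]{7,}\d")


def deidentify(record: dict) -> dict:
    """Return a copy of the record without identifier fields and with
    phone-like numbers in the free-text note masked."""
    safe = {k: v for k, v in record.items() if k not in IDENTIFIER_FIELDS}
    if "note" in safe:
        safe["note"] = PHONE_PATTERN.sub("[REDACTED]", safe["note"])
    return safe


# Example record (invented data). Only `outbound` would leave the local server.
record = {
    "name": "Jane Doe",
    "phone": "+254 700 000 000",
    "age": 34,
    "note": "Fever for 3 days. Reachable on 0700 000 000.",
}
outbound = deidentify(record)
print(outbound)
```

The original record is left untouched, so the identifiable version can stay in the local system while only the stripped copy is passed to an external service.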

The issue of cost was also examined. The additional marginal cost of AI tools is considered a worthwhile investment because the tools reduce diagnostic errors and strengthen patient trust. From a patient’s perspective, the small additional cost is outweighed by the greater value of safer, more accurate, and more reliable care. This framing positions AI not as an unnecessary luxury but as a cost-effective enabler of quality.

The discussions also explored how to balance patient preferences with professional responsibility. Some patients may request care based on AI outputs or online searches, but clinicians remain accountable for final decisions. Co-production in this context means respecting patient views, explaining clinical reasoning, and making decisions together. Doctors cannot abdicate responsibility to AI or patient demands, but they must integrate these perspectives into shared decision-making. This balance ensures that AI augments, rather than undermines, professional accountability and patient trust.

Broader concerns around cyber risks and professional dependency were also addressed. While AI systems raise new questions, the panel stressed that the main vulnerabilities lie in broader internet use and digital practices, not the AI tools themselves. On dependency, the consensus was that AI will inevitably shift how clinicians work, just as mobile phones transformed communication. The key is to treat AI as an assistant rather than a master, building safeguards and resilience so that clinicians remain capable even if systems fail. Ethical risk management is therefore critical as adoption expands.

The discussion also emphasized that AI in healthcare is here to stay, and the challenge is how to use it responsibly. Collaboration, regulatory engagement, and open sharing of lessons will be key to ensuring AI enhances human-centered care rather than eroding it. The guiding principle is not just efficiency or innovation, but making healthcare kinder, safer, and more co-produced with patients and communities.

From Hype to Human-Centered Transformation

AI in healthcare is not about replacing people with machines but about reimagining how health systems work to be smarter, safer, and more compassionate. Administrative efficiencies, clinical decision support, and patient-facing tools show the promise of AI to reduce burdens and expand access. Yet the true test is whether these innovations strengthen trust, equity, and human connection. In Africa, the opportunity is to develop context-driven, ethical, and inclusive solutions. With collaboration, regulation, and a commitment to values, AI can become less of a distant revolution and more of a daily practice, helping health systems dance in step with patients and communities toward better care for all.

Key Session Highlights

Breakthrough Performance of AI in Healthcare

Rapid advances in large language models have brought unprecedented performance in diagnostic tasks, opening new opportunities for integration into care.

Augmenting Clinicians, Not Replacing Them

AI decision support can improve adherence to guidelines and reduce errors, but clinicians remain responsible for final judgments and patient care.

Privacy and Accountability Concerns

Use of patient data with AI raises critical questions around confidentiality, liability, and responsibility when errors occur.

Addressing Bias and Equity Risks

AI systems reflect the data they are trained on; without inclusive inputs, they risk reinforcing inequities in access and prioritization.

Ethics and Care Relationships

Protecting patient dignity, strengthening human relationships, and ensuring informed consent remain central as AI enters care settings.

Language and Local Context Matter

Current AI tools often struggle with multilingual, code-switching environments, underscoring the need for localization to make them effective in African health systems.

Feedback from Participants

“Doctors should spend more time. We are social beings and we need each other, especially when at our low points.”

“We are in low-income countries, so what measures can be taken for rural communities where there is poor network coverage and no electrical power for AI?”

Key Session Takeaways

Artificial Intelligence has immense potential in healthcare, but only if it’s equitable. When AI systems are trained on biased data, they can reinforce existing inequalities. Ensuring inclusion in AI design is key to ethical, people-centered care.

Key Takeaways

The quality and diversity of training data directly determine how fair and accurate AI decisions are.

Healthcare technologies should be designed to serve all communities, not just those best represented in data.

Developers and health professionals must align AI use with ethical principles of justice and fairness.

Recent advances in large language models show performance levels rivaling and even surpassing expert physicians in diagnostic tasks, opening new possibilities for care delivery, decision support, and medical education.

Key Takeaways

Large language models have achieved remarkable improvements in medical reasoning and diagnostics.

AI can assist healthcare workers in decision-making, improving accuracy and efficiency.

As AI advances, ethical oversight and transparent integration into healthcare systems are vital.

As AI begins to shape healthcare, key questions arise around bias, privacy, and regulation. Ensuring these tools are localized, transparent, and responsibly deployed will be crucial in balancing innovation with patient trust and safety in the evolving digital health era.

Key Takeaways

Even as technology improves, models can misrepresent or misinterpret information, requiring ongoing human oversight.

What works in one region may not fit another. AI must reflect local language, culture, and healthcare systems.

Protecting patient data and clarifying when AI is used strengthens accountability.

As AI tools become part of care delivery, doctors face new dynamics in shared decision-making. While patients bring valuable insights and preferences, clinicians must balance these with professional judgment.

Key Takeaways

Effective care involves collaboration between patient and clinician, guided by mutual respect and understanding.

AI can inform decisions, but medical expertise determines the safest and most ethical course of action.

Explaining the reasoning behind clinical decisions builds trust and reduces misunderstandings.

AI tools are helping healthcare workers gain insights faster and more efficiently. Yet, human oversight remains vital to ensure accuracy, context, and the quality of insights drawn.

Key Takeaways

Automated tools can accelerate data analysis and reporting without compromising depth.

Technology should complement, not replace, expert judgment and quality assurance.

Africa has the potential to lead in AI-driven healthcare by starting small, fostering collaboration, and maintaining human-centered care.

Key Takeaways

Explore simple, low-cost AI tools to address everyday workflow challenges.

Sharing experiences and insights accelerates responsible innovation.

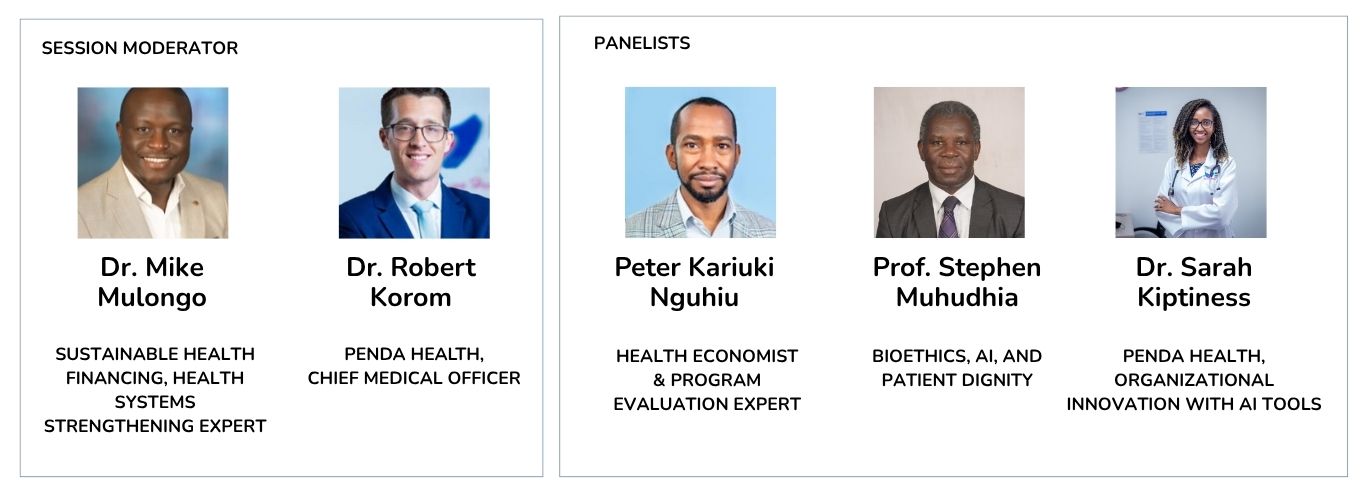

Live Recording, Speakers and Panelists

Exploring Generative AI’s Role in Enhancing Quality of Care: Exploring How Technology is Enabling Human-Centered Communication, Consent, and Care

The future of AI in healthcare will be shaped not only by technology but by the ideas and experiences of those who use it every day. We invite you to share your reflections, challenges, and examples of how AI tools are being applied in your health systems. By learning from each other, we can build safer, more inclusive, and human-centered approaches to digital transformation in Africa and beyond.

Join us in working towards more responsive, data-led health systems across Africa.
